Configure the PI extractor

To configure the PI extractor, you must create a configuration file in YAML format. The file is split into sections, each represented by a top-level entry. Subsections are nested under their parent section.

You can use either the sample complete or minimal configuration files included with the installer as a starting point for your configuration settings:

config.default.yml - This file contains all configuration options and descriptions.

config.minimal.yml - This file contains a minimum configuration and no descriptions.

You must name the configuration file config.yml.

You can set up extraction pipelines to use versioned extractor configuration files stored in the cloud.

Before you start

- Optionally, copy one of the sample files in the config directory and rename it to config.yml.

- The config.minimal.yml file doesn't include a metrics section. Copy this section from the example below if the extractor is required to send metrics to a Prometheus Pushgateway.

- Set up an extraction pipeline and note the external ID.

Minimal YAML configuration file

The YAML settings below contain valid PI extractor 2.1 configurations. The values wrapped in ${} are replaced with environment variables with that name. For example, ${COGNITE_PROJECT} will be replaced with the value of the environment variable called COGNITE_PROJECT.

The configuration file has a global parameter version, which holds the version of the configuration schema used in the configuration file. This document describes version 3 of the configuration schema.

    version: 3

    cognite:
      project: '${COGNITE_PROJECT}'
      idp-authentication:
        tenant: ${COGNITE_TENANT_ID}
        client-id: ${COGNITE_CLIENT_ID}
        secret: ${COGNITE_CLIENT_SECRET}
        scopes:
          - ${COGNITE_SCOPE}

    time-series:
      external-id-prefix: 'pi:'

    pi:
      host: ${PI_HOST}
      username: ${PI_USER}
      password: ${PI_PASSWORD}

    state-store:
      database: LiteDb
      location: 'state.db'

    logger:
      file:
        level: 'information'
        path: 'logs/log.log'

    metrics:
      push-gateways:
        - host: 'https://prometheus-push.cognite.ai/'
          username: ${PROMETHEUS_USER}
          password: ${PROMETHEUS_PASSWORD}
          job: ${PROMETHEUS_JOB}

where:

- version is the version of the configuration schema. Use version 3 to be compatible with the Cognite PI extractor 2.1.

- cognite and pi are how the extractor reads the authentication details for CDF (cognite) and PI (pi) from environment variables. Since no host is specified in the cognite section, the extractor uses the default value, https://api.cognitedata.com, and assumes that the PI server uses Windows authentication.

- time-series configures the extractor to create time series in CDF where the external IDs are prefixed with pi:. You can also use a data set ID configuration to add all time series created by the extractor to a particular data set.

- state-store configures the extractor to save the extraction state locally using a LiteDB database file named state.db.

- logger configures the extractor to log at information level and write log messages to a log file at logs/log.log. By default, new files are created daily and retained for 31 days. The date is appended to the file name.

- metrics points to the Prometheus Pushgateway hosted by CDF. It assumes that a user has already been created. For the Pushgateway hosted by CDF, the job name (${PROMETHEUS_JOB}) must start with the username followed by '-'.

Configure the PI extractor

Cognite

Include the cognite section to configure which CDF project the extractor will load data into and how to connect to the project. This section is mandatory and should always contain the project and authentication configuration.

| Parameter | Description |
|---|---|
| project | Insert the CDF project name you want to ingest data into. This is a required value. |
| api-key | We've deprecated API-key authentication and strongly encourage customers to migrate to authentication with IdP. |
| host | Insert the base URL of the CDF project. The default value is https://api.cognitedata.com. |
| idp-authentication | Insert the credentials for authenticating to CDF using an external identity provider. You must enter either an API key or use IdP authentication. |
| extraction-pipeline | Insert the external ID of an extraction pipeline in CDF. You should create the extraction pipeline before you configure this section. |
| metadata-targets | Configuration for targets for metadata, meaning assets, time series metadata, and relationships. |
| metadata-targets/clean | Configuration for enabling writing to clean. Options: |
| metadata-targets/raw | Configuration for writing to CDF RAW. Options: |

Identity provider (IdP) authentication

Include the idp-authentication section to enable the extractor to authenticate to CDF using an external identity provider, such as Azure AD.

| Parameter | Description |
|---|---|
| client-id | Enter the client ID from the IdP. This is a required value. |
| secret | Enter the client secret from the IdP. This is a required value. |
| scopes | List the scopes. This is a required value. |
| tenant | Enter the Azure tenant. This is a required value. |
| authority | Insert the base URL of the authority. The default value is https://login.microsoftonline.com/ |
| min-ttl | Insert the minimum time in seconds a token will be valid. The cached token is refreshed if it expires in less than min-ttl seconds. The default value is 30. |

CDF Retry policy

Include the cdf-retries subsection to configure the automatic retry policy used for requests to CDF. This subsection is optional. If you don't enter any values, the extractor uses the default values.

| Parameter | Description |
|---|---|
| timeout | Specify the timeout in milliseconds for each individual try. The default value is 80000. |
| max-retries | Enter the maximum number of retries. If you enter a negative value, the extractor keeps retrying. The default value is 5. |
| max-delay | Enter the maximum delay in milliseconds between each try. The base delay is calculated according to 125*2^retry ms. If this value is less than 0, there is no maximum. 0 means that there is never any delay. The default value is 5000. |
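As an illustration, the fragment below spells out the documented defaults. It is a minimal sketch that assumes cdf-retries is nested directly under the cognite section, and that the retry counter in the delay formula starts at 0; neither detail is stated explicitly above.

```yaml
cognite:
  project: '${COGNITE_PROJECT}'
  cdf-retries:
    timeout: 80000    # milliseconds allowed for each individual try
    max-retries: 5    # a negative value makes the extractor retry indefinitely
    max-delay: 5000   # milliseconds; upper bound on the delay between tries
    # Base delay 125*2^retry ms, assuming retry counts from 0:
    #   125, 250, 500, 1000, 2000, 4000, 8000, ...
    # With max-delay: 5000, any delay above 5000 ms is capped to 5000 ms.
```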

CDF Chunking

Include the cdf-chunking subsection to set up the rules you can configure to optimize the number of requests against CDF endpoints. This subsection is optional. If you don't enter any values, the extractor uses the default values based on CDF's current limits.

If you increase the default values, requests may fail due to limits in CDF.

| Parameter | Description |
|---|---|
| time-series | Enter the maximum number of time series per get/create time series request. The default value is 1000. |
| data-point-time-series | Enter the maximum number of time series per data point create request. The default value is 10000. |
| data-points | Enter the maximum number of ingested data points within a single request to CDF. The default value is 100000. |
| data-point-delete | Enter the maximum number of ranges per delete data points request. The default value is 1000. |
| data-point-list | Enter the maximum number of time series per data point read request, used when getting the first point in a time series. The default value is 100. |
| data-point-latest | Enter the maximum number of time series per data point read latest request, used when getting the last point in a time series. The default value is 100. |
| raw-rows | Enter the maximum number of rows per request to CDF RAW. This is used with raw state-store. The default value is 10000. |
| events | Enter the maximum number of events per get/create events request. This is used for logging extractor incidents as CDF events. The default value is 1000. |
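A possible use of this subsection is to send smaller requests than the defaults, for example on a constrained network. The sketch below assumes cdf-chunking is nested under the cognite section; the lowered values are illustrative only and are not recommendations.

```yaml
cognite:
  project: '${COGNITE_PROJECT}'
  cdf-chunking:
    time-series: 500       # default 1000
    data-points: 50000     # default 100000
    raw-rows: 5000         # default 10000
    # Parameters left out keep their default values.
```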

CDF Throttling

Include the cdf-throttling subsection to configure how requests to CDF should be throttled. This subsection is optional. If you don't enter any values, the extractor uses the default values.

| Parameter | Description |
|---|---|
| time-series | Enter the maximum number of parallel requests per time series operation. The default value is 20. |
| data-points | Enter the maximum number of parallel requests per data points operation. The default value is 10. |
| raw | Enter the maximum number of parallel requests per raw operation. The default value is 10. |
| ranges | Enter the maximum number of parallel requests per first/last data point operation. The default value is 20. |
| events | Enter the maximum number of parallel requests per events operation. The default value is 20. |
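The fragment below sketches how throttling might be reduced to put less concurrent load on CDF. It assumes cdf-throttling is nested under the cognite section, and the values are illustrative only.

```yaml
cognite:
  project: '${COGNITE_PROJECT}'
  cdf-throttling:
    time-series: 10   # default 20
    data-points: 5    # default 10
    ranges: 10        # default 20
    # raw and events are omitted and keep their defaults (10 and 20).
```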

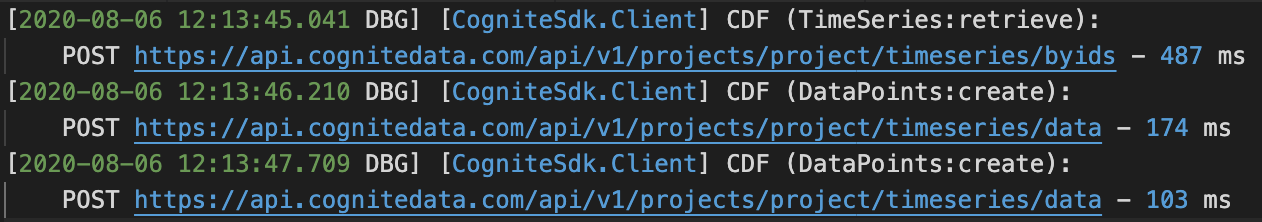

SDK Logging

Include the sdk-logging subsection to enable or disable log output from the Cognite SDK. The extractor is built using Cognite’s .NET SDK. If you enable this logging, the extractor displays any logs produced by the SDK. This configuration is optional. To disable logging from the SDK, exclude this section or set disable to true.

| Parameter | Description |
|---|---|
| disable | Set to true to disable logging from the SDK. The default value is false. |
| level | Enter the minimum level of logging, one of trace, debug, information, warning, error, critical, or none. The default value is debug. |
| format | Select the format of the log message. The default value is CDF ({Message}): {HttpMethod} {Url} - {Elapsed} ms. |
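For reference, a minimal sketch of this subsection using the documented parameters, assuming sdk-logging is nested under the cognite section. The format string is quoted because it contains a colon followed by a space, which YAML would otherwise read as a key/value separator.

```yaml
cognite:
  project: '${COGNITE_PROJECT}'
  sdk-logging:
    disable: false
    level: information   # only log SDK messages at information level or above
    format: 'CDF ({Message}): {HttpMethod} {Url} - {Elapsed} ms'
```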

If this section is configured, the additional logs are mainly details about requests to CDF, formatted according to the format parameter.

Time series

Include the time-series section for configurations related to the time series ingested by the extractor. This section is optional. If you don't enter any values, the extractor uses the default values.

| Parameter | Description |
|---|---|
| external-id-prefix | Enter the external ID prefix to identify the time series in CDF. Leave empty for no prefix. The external ID in CDF is this optional prefix followed by the PI Point name or PI Point ID. The default value is "". |
| external-id-source | Enter the source of the external ID. This is either Name or SourceId. By default, the PI Point name is used as the source. If SourceId is selected, the PI Point ID is used. The default value is Name. |
| data-set-id | Specify the data set to assign to all time series controlled by the extractor, both new and current time series sourced by the extractor. If you don't configure this, the extractor doesn't change the current time series' data set. The data set is specified with the internal ID (long). The default value is null/empty. |

This is an example time series configuration:

    time-series:
      external-id-prefix: 'pi:'
      external-id-source: 'SourceId'
      data-set-id: 1234567890123456

This configures the extractor to create time series in CDF, as shown below. The time series' external ID is created by appending the PI Point ID 12345 to the configured prefix pi:

    {
      "externalId": "pi:12345",
      "name": "PI-Point-Name",
      "isString": false,
      "isStep": false,
      "dataSetId": 1234567890123456
    }

Events

Include the events section for configurations related to events in CDF. This section is optional. If configured and store-extractor-events-interval is greater than zero, the PI extractor creates events for extractor reconnection and PI data pipe loss incidents. Reconnection and data loss may cause the extractor to miss some historical data point updates. Logging these as events in CDF provides a way of inspecting data quality. When combined with the PI replace utility, you can use these events to correct data inconsistencies in CDF.

| Parameter | Description |
|---|---|
| source | Events have this value as the event source in CDF. The default value is "". |
| external-id-prefix | Insert an external ID prefix to identify the events in CDF. Leave empty for no prefix. The default value is "". |
| data-set-id | Select a data set to assign to all events created by the extractor. We recommend using the same data set ID used in the Time series section. The default value is null/empty. |
| store-extractor-events-interval | Store extractor events in CDF with this interval, given in seconds. If this value is less than zero, no events are created in CDF. The default value is -1. |

This is an example events configuration:

    events:
      source: 'PiExtractor'
      external-id-prefix: 'pi-events:'
      data-set-id: 1234567890123456
      store-extractor-events-interval: 10

With this configuration, the extractor sends data loss/reconnection events to CDF every 10 seconds. The created events are similar to the one below. The external ID is created by concatenating source + event date and time + event count, and prefixing it with the configured pi-events: prefix. The type and subtype describe the incident and the cause of the event. This example is a data loss incident caused by a PI data pipe overflow:

    {
      "externalId": "pi-events:PiExtractor-2020-08-04 01:01:21.395(0)",
      "startTime": 1596502850668,
      "endTime": 1596502880393,
      "type": "DataLoss",
      "subtype": "DataPipeOverflow",
      "source": "PiExtractor"
    }

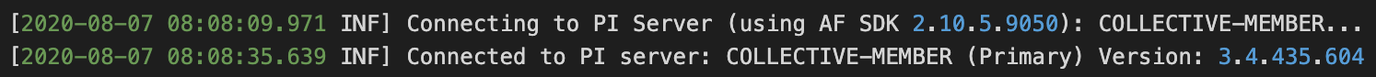

PI

Connect the extractor to a particular PI server or a PI collective. If you configure the extractor with a PI collective, the extractor will transparently maintain a connection to one of the active servers in the collective. The default settings provide Active Directory authorization to the PI host when the service account for the Windows service is authorized.

| Parameter | Description |
|---|---|
| host | Enter the hostname or IP address of the PI server or the PI collective name. If the host is a collective member name, the extractor connects to that member. The default value is null/empty. This is a required value. |
| username | Enter the username to use for authentication. Leave this empty if the Windows service account under which the extractor runs is authorized in Active Directory to access the PI host. The default value is null/empty. |
| password | Enter the password for the provided username, if any. The default value is null/empty. |
| native-authentication | Determines whether the extractor will use the native PI authentication or Windows authentication. The default value is false, which indicates Windows authentication. |
| parallelism | Insert the number of parallel requests to the PI server. If backfill-parallelism is defined, this number excludes backfill requests. The default value is 1. |
| backfill-parallelism | Insert the number of parallel requests to the PI server for backfills. This allows the separate throttling of backfills. The default value is 0. |
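The fragment below sketches a pi section that uses native PI authentication and throttles backfill requests separately from other requests. The parallelism values are illustrative assumptions, not tuning advice.

```yaml
pi:
  host: ${PI_HOST}
  username: ${PI_USER}
  password: ${PI_PASSWORD}
  native-authentication: true   # false (the default) means Windows authentication
  parallelism: 4                # parallel requests, excluding backfill requests
  backfill-parallelism: 2       # parallel requests reserved for backfills
```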

If host is set to a PI server or a PI collective, the extractor connects to it and logs the server version and the OSIsoft AF SDK version. If host is set to a collective member, the log output also shows whether it is a primary or secondary member of the collective.

Metrics

These are configurations related to sending extractor metrics to Prometheus. This section is optional, but we recommend using a Pushgateway to collect extractor metrics to monitor the extractor's health and performance.

Server

Include the server subsection to define an HTTP server embedded in the extractor from which Prometheus can scrape metrics. This subsection is optional.

| Parameter | Description |
|---|---|
| host | Start a metrics server in the extractor with this host for Prometheus scrape. Example: localhost, without scheme and port. The default value is null/empty. |
| port | Enter the server port. The default value is null/empty. |

Pushgateways

Include the push-gateways subsection to describe an array of Prometheus pushgateway destinations to which the extractor will push metrics. This subsection is optional.

| Parameter | Description |
|---|---|
| host | Insert the absolute URL of the Pushgateway host. Example: http://localhost:9091. If you are using Cognite’s Pushgateway, this is https://prometheus-push.cognite.ai/. The default value is null/empty. |
| job | Enter the job name. If you are using Cognite’s Pushgateway, this starts with username followed by -. For instance, if username is cog, a valid job name would be cog-pi-extractor. Otherwise, this can be any job name. The default value is null/empty. |
| username | Enter the user name in the Pushgateway. The default value is null/empty. |
| password | Enter the password. The default value is null/empty. |

If you configure this section, the extractor pushes metrics that can be displayed, for instance, in Grafana. Create Grafana dashboards with the extractor metrics using Prometheus as data source.
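A sketch of a metrics section that combines both subsections is shown below. The port number 9000 and the job name are hypothetical; the Pushgateway URL is the local example from the table above.

```yaml
metrics:
  server:
    host: localhost   # no scheme and no port in this value
    port: 9000        # hypothetical port for Prometheus to scrape
  push-gateways:
    - host: 'http://localhost:9091'
      job: pi-extractor   # any job name is allowed for a non-Cognite Pushgateway
```

Because push-gateways is an array, further destinations can be added as additional list items.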

State storage

Include the state-store section to configure the extractor to save the extraction state periodically, so that extraction resumes faster on the next run. This section is optional. If not present, or if database is set to None, the extraction state is restored by querying the timestamps of the first and the last data points for each time series.

Querying the first and last data points in each time series may take more time than using a state store.

| Parameter | Description |
|---|---|
| database | Select which type of database to use, either None, Raw, or LiteDb. Raw saves the extraction state in CDF RAW. LiteDb uses LiteDB to create a local database file with the state. The default value is None. |
| location | Insert the path to the .db file used by the state storage or name of the CDF RAW table. The default value is null/empty. |
| interval | Enter the time in seconds between each write to the extraction state store. If zero or less, the state is not saved periodically. The default value is 10. |
| time-series-table-name | Enter the collection name in LiteDb or the table in CDF RAW to store the extracted time series ranges. The default value is ranges. |
| extractor-table-name | Enter the collection name in LiteDb or the table in CDF RAW to store the extractor state: last heartbeat timestamp and last metadata update timestamp. The default value is extractor. |

If CDF RAW is used, you can see the extracted states under Manage staged data in CDF.
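As a rough sketch, a state store kept in CDF RAW could be configured as follows. The location value is a hypothetical name, and the table names are simply the documented defaults written out.

```yaml
state-store:
  database: Raw
  location: 'pi-extractor-state'     # hypothetical name in CDF RAW
  interval: 30                       # write the state every 30 seconds (default 10)
  time-series-table-name: ranges     # documented default
  extractor-table-name: extractor    # documented default
```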

Extractor

The extractor section contains various configurations for the operation of the extractor itself. If you want to extract a subset of the PI time series, specify the list of time series to be extracted in this section. This is how the list is created:

- If any of include-tags, include-prefixes, or include-patterns is not empty, start with the union of these three. Otherwise, start with all points.

- Remove all points matched by exclude-tags, exclude-prefixes, and exclude-patterns.

| Parameter | Description |
|---|---|
| time-series-update-interval | Set an interval in seconds between each time series update, discovering new time series in PI and synchronizing metadata from PI to CDF. The default value is 86400 (24 hours). |
| include-tags | List the tags to include to retrieve time series with a name matching exactly this tag (case sensitive). |
| include-prefixes | List the prefixes to include to retrieve time series with names starting with this prefix. |
| include-patterns | List the patterns to include to retrieve time series with names containing the pattern. |
| exclude-tags | List the tags to exclude. |
| exclude-prefixes | List the prefixes to exclude. |
| exclude-patterns | List the patterns to exclude. |
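The include/exclude rules above can be sketched with a small example. All tag names, prefixes, and patterns below are hypothetical.

```yaml
extractor:
  time-series-update-interval: 3600   # look for new time series every hour
  include-tags:
    - 'Sinusoid'          # exact, case-sensitive name match
  include-prefixes:
    - 'Plant1.'           # names starting with "Plant1."
  exclude-patterns:
    - 'test'              # names containing "test"
```

With this fragment, the extractor would start from the union of the point named Sinusoid and all points whose names start with Plant1., and then remove any of those whose names contain test. A hypothetical point named Plant1.test.Flow would therefore be skipped.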

Delete time series

Include the deleted-time-series subsection to configure how the extractor handles time series that exist in CDF but not in PI. This subsection is optional, and the default behavior is none (do nothing).

This only affects time series with the same data set ID and external ID prefix as the time series configured in the extractor.

To find time series that exist in CDF but not in PI, the extractor:

- Lists all time series in CDF that have the configured external ID prefix and data set ID.

- Filters the time series using the include/exclude rules defined in the extractor section.

- Matches the result against the time series obtained from the PI Server after filtering these using the include/exclude rules.

| Parameter | Description |
|---|---|
| behavior | Select the action taken by the extractor, either none, flag, or delete. Setting this to flag performs a soft deletion: the time series are flagged as deleted but aren't removed from the destination. Setting it to delete deletes the time series from CDF. The default value is none, which means the time series are ignored. If you set this to delete, the time series in CDF that cannot be found in PI will be permanently deleted from CDF. |
| flag-name | If you've set behavior to flag, use this string as the flag name to mark the time series as deleted. In CDF, metadata is added to the time series with this as the key and the current time as the value. The default value is deletedByExtractor. |

If you don't configure an external-id-prefix, the extractor may find time series in CDF with no external ID. Even if these time series belong to the same data set, they couldn't be created by the extractor, as the extractor always assigns an external ID when creating new time series. In this case, the time series won't be deleted.
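A minimal sketch of soft deletion follows, assuming deleted-time-series is nested under the extractor section as the headings suggest. The time-series values are repeated from the earlier example because they determine which time series in CDF are considered.

```yaml
time-series:
  external-id-prefix: 'pi:'
  data-set-id: 1234567890123456

extractor:
  deleted-time-series:
    behavior: flag                  # soft deletion; nothing is removed from CDF
    flag-name: deletedByExtractor   # metadata key set on flagged time series
```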

Backfill

Include the backfill section to configure how the extractor fills in historical data back in time with respect to the first data point in CDF. The backfill process completes when all the data points in the PI Data Archive are sent to CDF or when the extractor reaches the target timestamp for all time series if the to parameter is set.

| Parameter | Description |
|---|---|
| skip | Set to true to disable backfill. The default value is false. |
| step-size-hours | Enter the step size as a whole number of hours. Set to 0 to freely backfill all time series. Each iteration of backfill will backfill all time series to the next step before stepping further backward. The default value is 168. |
| to | Enter the target CDF timestamp at which to stop the backfill. The backfill may overshoot this timestamp. The overshoot will be less with smaller data point chunk size. The default value is 0 (backfill all time series to epoch). |
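The fragment below sketches a backfill that proceeds in one-day steps and stops at the start of 2020. It assumes that a CDF timestamp is expressed in milliseconds since the Unix epoch, which the table above doesn't state explicitly.

```yaml
backfill:
  skip: false
  step-size-hours: 24    # backfill all time series one day at a time (default 168)
  to: 1577836800000      # 2020-01-01T00:00:00Z, assuming milliseconds since epoch
```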

Frontfill

Include the frontfill section to configure how the extractor fills in historical data forward in time with respect to the last data point in CDF. At startup, the extractor fills in the gap between the last data point in CDF and the last data point in PI by querying the archived data in the PI Data Archive. After that, the extractor only receives data streamed through the PI Data Pipe. These are real-time changes made to the time series in PI before archiving.

When data points are archived in PI, they may be subject to compression, reducing the total amount of data points in a time series. Therefore, the backfill and frontfill tasks will receive data points after compression, while the streaming task will receive data points before compression. Learn more about compression in this video.

| Parameter | Description |
|---|---|
| skip | Set to true to disable frontfill and streaming. The default value is false. |
| streaming-interval | Insert the interval in seconds between each call to the PI Data Pipe to fetch new data. The default value is 1. If you set this parameter to a high value, there is a higher chance of having a client outbound queue overflow in the PI server. Overflow may cause loss of data points in CDF. |
| delete-data-points | If you set this to true, the corresponding data points are deleted in CDF when data point deletion events are received in the PI Data Pipe. The default value is false. Enabling this parameter may increase the streaming latency of the extractor since the extractor verifies the data point deletion before proceeding with the insertions. |
| use-data-pipe | Older PI servers may not support data pipes. If that's the case, set this value to false to disable data pipe streaming. The frontfiller task will run constantly and will frequently query the PI Data Archive for new data points. The default value is true. |
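As an illustration, the fragment below sketches a frontfill section for an older PI server that doesn't support data pipes, using only the parameters from the table above.

```yaml
frontfill:
  skip: false
  use-data-pipe: false       # fall back to querying the PI Data Archive repeatedly
  delete-data-points: false  # default; deletions in PI are not propagated to CDF
```

When use-data-pipe is left at its default of true, streaming-interval controls how often the PI Data Pipe is polled.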

Logger

Include the logger section to set up logging to a console and files.

File

Include the file subsection to enable logging to a file. This subsection is optional. If level is invalid, logging to file is disabled.

| Parameter | Description |
|---|---|
| level | Select the verbosity level for file logging. Valid options, in decreasing level of verbosity, are verbose, debug, information, warning, error, fatal. The default value is null/empty. |
| path | Insert the path relative to the install directory or current working directory when using multiple services. Example: logs/log.txt will create log files with a log prefix followed by a date as the suffix and txt as the extension in the logs folder. The default value is null/empty. |
| retention-limit | Specify the maximum number of log files that will be retained in the log folder. The default value is 31. |
| rolling-interval | Specify a rolling interval for log files as either day or hour. The default value is day. |

Console

Include the console subsection to enable logging events to a standard output, such as a terminal window. This subsection is optional. If level is invalid, console is disabled.

If the extractor is run as a service, logging to a console will have no effect.

| Parameter | Description |
|---|---|
| level | Select the verbosity level for console logging. Valid options, in decreasing level of verbosity, are verbose, debug, information, warning, error, fatal. The default value is null/empty. |
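A sketch of a logger section that combines both subsections is shown below: detailed logs go to hourly files, while the console only shows warnings and above. The retention and level values are illustrative.

```yaml
logger:
  console:
    level: 'warning'
  file:
    level: 'debug'
    path: 'logs/log.txt'
    retention-limit: 48      # keep at most 48 log files (default 31)
    rolling-interval: hour   # start a new file every hour (default day)
```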